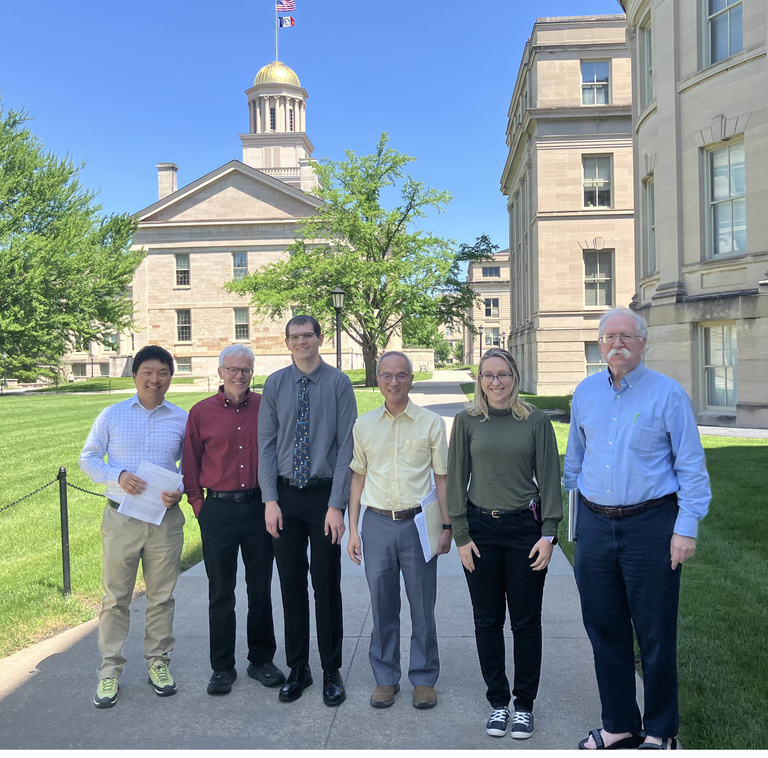

Congratulations to our PhD student, Max Sampson, for successfully defending his dissertation, "Topics in Conformal Prediction and Causal Inference," on May 22, 2024. Pictured with his committee (L to R): Boxiang Wang, Joseph Lang, Max, Kung-Sik Chan (Chair), Emily Roberts (Biostatistics), and Luke Tierney.

Public Abstract: Machine learning models, specifically deep learning models, have gained considerable popularity because of the flexibility they provide to answer different questions. One problem with these models is that they only provide a point estimate without any “wiggle room,” or uncertainty quantification. For example, if we wanted to predict someone’s income based on their age and college education, we could use a neural network. However, the output would be only a single number that would almost certainly differ from the truth. Instead of a single point estimate, we want to focus on sets that provide a range of plausible values. In this dissertation we develop two methods that provide prediction sets for many types of models, including deep learning models. We then develop a method to provide uncertainty quantification when what we are predicting has multiple dimensions, for example systolic and diastolic blood pressures. Finally, we extend some of these prediction methods from associational relationships to cause-and-effect relationships. For example, “if you take 75 milligrams of aspirin, the aspirin will cause your fever to decrease between 2 and 3 degrees,” instead of, “when people take 75 milligrams of aspirin, for whatever reason their fevers will decrease an average of 1 to 2 degrees.”

Regular Abstract: In this dissertation, the research focuses on uncertainty quantification to create prediction intervals using exchangeable data for regression in the conformal prediction framework. Several conformal regression methods already exist; however, they fail to account for multimodal errors without partitioning the data.

First, we introduce two methods that attempt to find the highest predictive density sets, one for a conditional homoskedastic error and one for a conditional heteroskedastic error. Both leverage existing methods for finding the highest predictive density sets, allowing the methods to be easily implemented. Simulation studies are included to demonstrate the effectiveness of the methods compared to existing methods in a variety of settings. We also establish theoretical asymptotic differences between the oracle highest density set and the estimated density set for homogeneous errors.

We then introduce hyperrectangular conformal prediction regions for $p$-dimensional responses for both point and quantile regression. We establish finite-sample coverage guarantees as well as asymptotic balance results. Extensive simulation studies are included to demonstrate how well the method works and whether it achieves balance. We then compare the method with a Bonferroni correction in a real data application.

Lastly, we combine conformal prediction and conditional density estimation for individualized causal prediction intervals with a continuous treatment. We compare the conformal prediction intervals with unweighted naïve prediction intervals to demonstrate the advantages that the conformal prediction method has.